LLM Chat

This feature is currently in BETA, which means:

- We reserve the right to make breaking changes without prior notice.

- You might lose the chat's history when resetting to an old entity revision. If this happens, you can solve the associated error by clearing the chat's history.

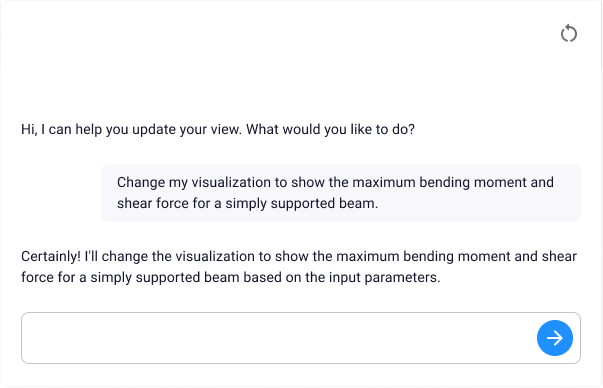

A Chat enables the user to have a conversation with an LLM from within the app editor.

import viktor as vkt


class Parametrization(vkt.Parametrization):
    chat = vkt.Chat("Chatbot", method="call_llm")


class Controller(vkt.Controller):
    ...

    def call_llm(self, params, **kwargs):
        conversation = params.chat
        messages = conversation.get_messages()
        ...  # here code is added to call the LLM
        return vkt.ChatResult(...)

The controller accesses the user's messages by calling the get_messages method on the ChatConversation object obtained from params.chat.

get_messages returns the full history of the conversation as a list of messages in the following format, where 'role' indicates who sent the message (user or assistant):

{
    'role': str,  # user | assistant
    'content': str,  # the actual message
}
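As a minimal sketch of working with this format, the helper below picks the most recent user message out of a history list. The function name last_user_message and the sample history are hypothetical; only the 'role' and 'content' keys come from the format above.

```python
from typing import Optional


def last_user_message(messages: list[dict]) -> Optional[str]:
    """Return the content of the most recent 'user' message, or None if there is none."""
    for message in reversed(messages):
        if message["role"] == "user":
            return message["content"]
    return None


# Hypothetical history in the documented format
history = [
    {"role": "user", "content": "What is a beam?"},
    {"role": "assistant", "content": "A beam is a structural element."},
    {"role": "user", "content": "And a column?"},
]
print(last_user_message(history))  # prints: And a column?
```

In a controller method, the list returned by conversation.get_messages() would take the place of the sample history.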

The controller method sends the current state of the conversation to the LLM, retrieves its response, and returns that response from the Chat method within a ChatResult.

Before the controller can pass the retrieved conversation history to any LLM client, the application must have access to a valid API key. The following section explains how this is done for the different providers.

API key configuration

Integrating a Large Language Model (LLM) into a VIKTOR application requires an API key from the chosen LLM provider. This key must be securely stored and made accessible to the application using environment variables.

Obtaining an API key

API keys are obtained directly from the respective LLM provider. The table below lists, per provider, the page where you can find or generate your key and the provider's guidance on keeping it safe:

| Provider | API key link | Safety |
|---|---|---|
| OpenAI (GPT) | API keys | Safety tips |
| Anthropic (Claude) | API keys | Key safety |
| Google (Gemini) | API keys | Security |

Setting API keys as environment variables

You can set environment variables directly from the VIKTOR CLI by passing the --env (or -e) flag to the install, start and run commands.

For example, to start your app with a specific OpenAI API key:

viktor-cli start --env OPENAI_API_KEY="your-api-key"

Replace "your-api-key" with the actual API key you want to use for this session. The CLI will connect the local app code to your VIKTOR environment as usual, but with the addition that the environment variable has been set on the running Python process.

Accessing API keys in application code:

Environment variables can be accessed within app.py or other relevant Python modules using os.getenv() from the built-in os module.

# app.py
import os

import viktor as vkt

# Example: accessing the OpenAI API key
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")
# OPENAI_API_KEY is now available for use with LLM clients if set in the environment


class Parametrization(vkt.Parametrization):
    chat = vkt.Chat("Chatbot", method="call_llm")


class Controller(vkt.Controller):
    parametrization = Parametrization

    def call_llm(self, params, **kwargs):
        conversation = params.chat
        messages = conversation.get_messages()
        ...  # here code is added to call the LLM
        return vkt.ChatResult(...)
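Because os.getenv() returns None when a variable is not set, a missing key may only surface later as an authentication error from the provider's client. A minimal sketch of a fail-fast check follows; the helper name require_env is hypothetical and not part of the VIKTOR SDK.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or raise a clear error if it is unset or empty."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(
            f"Environment variable {name} is not set; pass it with --env when starting the app."
        )
    return value
```

Calling require_env("OPENAI_API_KEY") inside the controller method, rather than at import time, keeps the app loadable while still giving a readable error as soon as the chat is used.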

Calling the LLM from the controller

With the correct API key set up, we can connect the app with the actual LLM.

A multi-turn chat workflow can be implemented for each of the main LLM providers as shown below. Each provider requires the installation of its corresponding Python client and may need specific handling of the chat history to meet its role formatting requirements (e.g., user, assistant).

- Anthropic Client

- OpenAI Client

- Gemini Client

To use the Anthropic client, you need to add the anthropic Python library to your requirements.txt file and install it for your app.

import os

import viktor as vkt
from anthropic import Anthropic  # don't forget to add it to requirements.txt and re-install

client = Anthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))


class Parametrization(vkt.Parametrization):
    chat = vkt.Chat("LLM Chat", method="call_llm")


class Controller(vkt.Controller):
    parametrization = Parametrization

    def call_llm(self, params, **kwargs):
        conversation = params.chat
        if not conversation:
            return None

        response = client.messages.create(
            model="claude-3-5-sonnet-latest",
            max_tokens=1024,
            messages=conversation.get_messages(),
            system="You are a helpful assistant that responds using simple and formal British English.",
        )
        return vkt.ChatResult(conversation, response.content[0].text)

For streaming responses, the LLM client yields parts of the response as they are generated, rather than waiting for the complete response. This can improve perceived performance for the user. The ChatResult can accept an iterable of strings for streaming.

import os

import viktor as vkt
from anthropic import Anthropic  # don't forget to add it to requirements.txt and re-install

client = Anthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))


class Parametrization(vkt.Parametrization):
    chat = vkt.Chat("LLM Chat", method="call_llm")


class Controller(vkt.Controller):
    parametrization = Parametrization

    def call_llm(self, params, **kwargs):
        conversation = params.chat
        if not conversation:
            return None

        llm_response_stream = client.messages.create(
            model="claude-3-5-sonnet-latest",
            max_tokens=1024,
            messages=conversation.get_messages(),
            system="You are a helpful assistant that responds using simple and formal British English.",
            stream=True,
        )

        # Keep only the events that carry text, then extract that text
        text_stream = map(
            lambda chunk: chunk.delta.text,
            filter(
                lambda chunk: chunk.type == "content_block_delta" and chunk.delta.text,
                llm_response_stream,
            ),
        )
        return vkt.ChatResult(conversation, text_stream)
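The map/filter pipeline can equivalently be written as a generator function, which may be easier to follow and to try out without an API key. The sketch below assumes only the event attributes used in the example above (type and delta.text); the name iter_text_deltas and the SimpleNamespace stand-in events are hypothetical.

```python
from types import SimpleNamespace
from typing import Iterable, Iterator


def iter_text_deltas(events: Iterable) -> Iterator[str]:
    """Yield the non-empty text of each 'content_block_delta' event, skipping everything else."""
    for event in events:
        if getattr(event, "type", None) != "content_block_delta":
            continue
        # Not every delta necessarily carries text, so read the attribute defensively
        text = getattr(event.delta, "text", None)
        if text:
            yield text


# Hypothetical stand-in events mimicking the attributes used above
fake_events = [
    SimpleNamespace(type="message_start"),
    SimpleNamespace(type="content_block_delta", delta=SimpleNamespace(text="Hello")),
    SimpleNamespace(type="content_block_delta", delta=SimpleNamespace(text="")),
    SimpleNamespace(type="content_block_delta", delta=SimpleNamespace(text=", world")),
    SimpleNamespace(type="message_stop"),
]
print("".join(iter_text_deltas(fake_events)))  # prints: Hello, world
```

The resulting generator is an iterable of strings, so it could be passed to vkt.ChatResult in place of text_stream.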

To use the OpenAI client, you need to add the openai Python library to your requirements.txt file and install it for your app.

When using OpenAI's chat completion API, prepend a 'system' message to the history manually, as VIKTOR’s ChatConversation does not include the 'system' role by default.

import os

import viktor as vkt
from openai import OpenAI  # don't forget to add it to requirements.txt and re-install

client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))


class Parametrization(vkt.Parametrization):
    chat = vkt.Chat("LLM Chat", method="call_llm")


class Controller(vkt.Controller):
    parametrization = Parametrization

    def call_llm(self, params, **kwargs):
        conversation = params.chat
        if not conversation:
            return None

        vkt_messages = conversation.get_messages()

        # Prepend the system message, since the VIKTOR history only holds user and assistant messages
        openai_messages = [
            {
                "role": "system",
                "content": "You are a helpful assistant that responds with simple but formal British English.",
            }
        ]
        openai_messages.extend(vkt_messages)

        completion = client.chat.completions.create(
            model="gpt-4.1", messages=openai_messages
        )
        return vkt.ChatResult(params.chat, completion.choices[0].message.content)

For streaming responses, the LLM client yields parts of the response as they are generated, rather than waiting for the complete response. This can improve perceived performance for the user. The ChatResult can accept an iterable of strings for streaming.

import os

import viktor as vkt
from openai import OpenAI  # don't forget to add it to requirements.txt and re-install

client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))


class Parametrization(vkt.Parametrization):
    chat = vkt.Chat("LLM Chat", method="call_llm")


class Controller(vkt.Controller):
    parametrization = Parametrization

    def call_llm(self, params, **kwargs):
        conversation = params.chat
        if not conversation:
            return None

        vkt_messages = conversation.get_messages()

        # Prepend the system message, since the VIKTOR history only holds user and assistant messages
        openai_messages = [
            {
                "role": "system",
                "content": "You are a helpful assistant that responds with simple but formal British English.",
            }
        ]
        openai_messages.extend(vkt_messages)

        stream = client.chat.completions.create(
            model="gpt-4.1",
            messages=openai_messages,
            stream=True,
        )

        # Stream text from the event chunks, skipping chunks without choices or content
        text_stream = (
            chunk.choices[0].delta.content
            for chunk in stream
            if chunk.choices and chunk.choices[0].delta.content is not None
        )
        return vkt.ChatResult(params.chat, text_stream)

To use the Gemini client, you need to add the google-genai Python library to your requirements.txt file and install it for your app.

Google's Gemini client handles multi-turn conversations using specific types like UserContent, ModelContent, and Part. You'll need to convert the VIKTOR message format ({'role': str, 'content': str}) into this structure for the chat history.

import os

import viktor as vkt
from google import genai  # don't forget to add it to requirements.txt and re-install
from google.genai import types

client = genai.Client(api_key=os.getenv("GEMINI_API_KEY"))


def viktor_messages_to_genai(messages):
    # Convert VIKTOR messages into the Gemini content types ('assistant' maps to ModelContent)
    history = []
    for msg in messages:
        role = msg.get("role")
        text = msg.get("content", "")
        if role == "user":
            history.append(types.UserContent(parts=[types.Part(text=text)]))
        elif role == "assistant":
            history.append(types.ModelContent(parts=[types.Part(text=text)]))
    return history


class Parametrization(vkt.Parametrization):
    chat = vkt.Chat("Chatbot", method="call_llm")


class Controller(vkt.Controller):
    parametrization = Parametrization

    def call_llm(self, params, **kwargs):
        conversation = params.chat
        if not conversation:
            return None

        messages = conversation.get_messages()
        # The last message is the new user prompt; everything before it is history
        history_msgs = messages[:-1]
        user_msg = messages[-1]
        history = viktor_messages_to_genai(history_msgs)

        system_instruction = "You are a helpful assistant that responds with simple but formal British English"
        chat_session = client.chats.create(
            config=types.GenerateContentConfig(
                system_instruction=system_instruction,
                temperature=0.5,
            ),
            model="gemini-2.0-flash",
            history=history,
        )
        response = chat_session.send_message(user_msg["content"])
        return vkt.ChatResult(params.chat, response.text)

For streaming responses, the LLM client yields parts of the response as they are generated, rather than waiting for the complete response. This can improve perceived performance for the user. The ChatResult can accept an iterable of strings for streaming.

import os

import viktor as vkt
from google import genai  # don't forget to add it to requirements.txt and re-install
from google.genai import types

client = genai.Client(api_key=os.getenv("GEMINI_API_KEY"))


def viktor_messages_to_genai(messages):
    # Convert VIKTOR messages into the Gemini content types ('assistant' maps to ModelContent)
    history = []
    for msg in messages:
        role = msg.get("role")
        text = msg.get("content", "")
        if role == "user":
            history.append(types.UserContent(parts=[types.Part(text=text)]))
        elif role == "assistant":
            history.append(types.ModelContent(parts=[types.Part(text=text)]))
    return history


class Parametrization(vkt.Parametrization):
    chat = vkt.Chat("Chatbot", method="call_llm")


class Controller(vkt.Controller):
    parametrization = Parametrization

    def call_llm(self, params, **kwargs):
        conversation = params.chat
        if not conversation:
            return None

        messages = conversation.get_messages()
        # The last message is the new user prompt; everything before it is history
        history_msgs = messages[:-1]
        user_msg = messages[-1]
        history = viktor_messages_to_genai(history_msgs)

        system_instruction = "You are a helpful assistant that responds with simple but formal British English"
        chat_session = client.chats.create(
            config=types.GenerateContentConfig(
                system_instruction=system_instruction,
                temperature=0.5,
            ),
            model="gemini-2.0-flash",
            history=history,
        )
        chunk_iter = chat_session.send_message_stream(user_msg["content"])
        # Skip chunks that carry no text
        text_stream = (chunk.text for chunk in chunk_iter if chunk.text)
        return vkt.ChatResult(params.chat, text_stream)

Publishing an app

For published applications, API keys can be set, updated, or removed from the 'Apps' section in your VIKTOR environment. Once configured, these environment variables are securely stored and available in the application’s code during runtime. This replaces the use of .env files or passing keys through the VIKTOR CLI for deployed apps.

Detailed guidance is available in the VIKTOR environment variables documentation.

More info

The technical API reference can be found here: Chat

All available arguments, in alphabetical order:

- first_message (optional): the first message that will appear on top of the empty conversation

  chat = vkt.Chat(..., first_message="How can I help you?")

- flex (optional): the width of the field between 0 and 100 (default=33)

  chat = vkt.Chat(..., flex=50)

- method (required): the controller method that will be triggered when the user clicks the "send" button

  chat = vkt.Chat(..., method='call_llm')

- placeholder (optional): placeholder message in the user prompt

  chat = vkt.Chat(..., placeholder="Ask anything")

- visible (optional): can be used when the visibility depends on other input fields

  chat = vkt.Chat(..., visible=vkt.Lookup("another_field"))

  See 'Hide a field' for elaborate examples.

Limitations

The VIKTOR SDK currently supports only the 'user' and 'assistant' roles for messages within the ChatConversation. For other roles (e.g., 'system', 'tool'), additional processing may be required depending on the specific LLM client being used.

The maximum response size in the ChatResult is 75000 characters.
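What happens when a response exceeds this limit is not described here, so one defensive option is to cap the response yourself before returning it. The following is a minimal sketch, assuming the limit counts Python string characters; cap_stream is a hypothetical helper and not part of the VIKTOR SDK.

```python
from typing import Iterable, Iterator

MAX_RESPONSE_CHARS = 75_000  # limit stated above


def cap_stream(chunks: Iterable[str], limit: int = MAX_RESPONSE_CHARS) -> Iterator[str]:
    """Yield chunks until `limit` characters have been emitted, truncating the last chunk if needed."""
    remaining = limit
    for chunk in chunks:
        if remaining <= 0:
            break
        piece = chunk[:remaining]
        remaining -= len(piece)
        yield piece


print("".join(cap_stream(["abc", "def", "ghi"], limit=5)))  # prints: abcde
```

For a streamed response, the text stream could be wrapped as cap_stream(text_stream) before it is passed to vkt.ChatResult; for a non-streamed response, slicing the string with text[:MAX_RESPONSE_CHARS] has the same effect.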